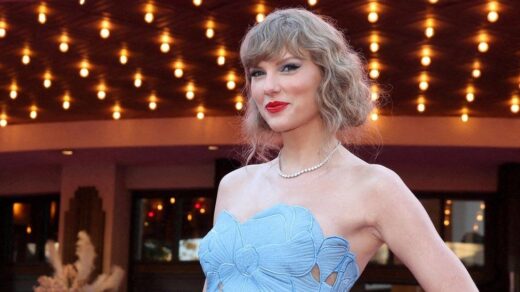

Social media platform X has blocked searches for Taylor Swift after explicit AI-generated images of the singer began circulating on the site. Joe Benarroch, X’s head of business operations, described the block as a temporary measure to prioritize safety. Users who search for Swift on the site receive an error message and are prompted to reload. It is unclear when X started blocking searches for Swift or whether it has done so for other public figures in the past.

The fake graphic images were viewed millions of times, causing concern among US officials and fans of the singer. Swift’s fans flagged the posts and the accounts sharing the fake images, and flooded the platform with real images and videos of her using the hashtag “protect Taylor Swift.” In response, X said in a statement that posting non-consensual nudity is strictly prohibited on its platform, and that it is actively removing all identified images and taking appropriate action against the accounts responsible.

The White House has expressed alarm over the spread of the AI-generated photos and called for legislation to address the misuse of AI technology on social media platforms. US politicians have also called for new laws to criminalize the creation of deepfake images. In the UK, sharing deepfake pornography was made illegal under the Online Safety Act in 2023.